🔴 CRITICAL WARNING: Evaluation Artifact – NOT Peer-Reviewed Science. This document is 100% AI-Generated Synthetic Content. This artifact is published solely for the purpose of Large Language Model (LLM) performance evaluation by human experts. The content has NOT been fact-checked, verified, or peer-reviewed. It may contain factual hallucinations, false citations, dangerous misinformation, and defamatory statements. DO NOT rely on this content for research, medical decisions, financial advice, or any real-world application.


Abstract

The convergence of artificial intelligence (AI) and operations research (OR) represents a paradigm shift in decision support systems, moving beyond the traditional objective of accelerating solver performance toward fundamentally reimagining how optimization problems are formulated, structured, and connected to real-world decision-making processes. This article presents a comprehensive methodological framework for AI-OR integration that emphasizes two complementary directions: decision-focused learning, which aligns predictive models directly with downstream optimization objectives, and language model-assisted problem formulation, which leverages large language models to bridge the gap between informal requirements and formal mathematical structures. We describe detailed protocols for implementing these methods, including mathematical formulations, algorithmic procedures, and integration strategies. The proposed framework incorporates validation approaches through synthetic benchmarks and real-world case studies, demonstrating improvements in decision quality, problem formulation efficiency, and human-machine collaboration. Our methodology addresses key challenges in hybrid algorithm design, including differentiability requirements, constraint handling, and the orchestration of symbolic reasoning with data-driven learning. The framework provides researchers and practitioners with structured approaches to develop more flexible, data-aware, and human-in-the-loop optimization workflows that maintain the rigor of mathematical programming while exploiting the representational power of modern machine learning.

Introduction

Operations research has long provided the mathematical foundations for structured decision-making across domains ranging from supply chain management and resource allocation to scheduling and network design [1]. Traditional OR methodologies rely on explicitly formulated mathematical programs—linear, integer, nonlinear, or stochastic—that encode objectives, constraints, and decision variables in precise formal languages [2]. These methods have achieved remarkable success when problem structures are well-understood and parameters are known or can be estimated with confidence [3].

However, contemporary decision environments present challenges that strain the classical OR paradigm. First, many real-world systems generate vast quantities of data that contain implicit information about objectives, constraints, and uncertainties, yet translating this data into formal optimization models remains largely a manual, expertise-intensive process [4]. Second, the traditional two-stage workflow—where prediction models are trained independently and their outputs subsequently fed into optimization solvers—can lead to suboptimal decisions because prediction accuracy does not necessarily align with decision quality [5], [6]. Third, problem formulation itself, arguably the most critical and difficult step in applied optimization, requires deep domain expertise and mathematical sophistication, creating barriers to OR adoption [7].

Artificial intelligence and machine learning offer complementary capabilities that can address these limitations. Rather than viewing AI as merely a means to accelerate solver performance—though neural network-based heuristics have shown promise in this area [8]—recent research emphasizes using AI to improve how optimization problems are posed, parameterized, and connected to organizational decision-making [9]. This perspective shift recognizes that the bottleneck in many applications is not computational speed but rather the cognitive and technical challenges of formulation and the misalignment between predictive objectives and decision outcomes [10].

Decision-Focused Learning

Decision-focused learning (DFL), also referred to as end-to-end predict-then-optimize or integrated learning and optimization, represents a fundamental reconceptualization of the relationship between prediction and optimization [11]. DFL does not train predictive models to minimize statistical loss functions (e.g., mean squared error), which ignore how the predictions will be used. Instead, it trains models to minimize the cost of the decisions made using those predictions [12]. This approach acknowledges that in many applications prediction accuracy matters only insofar as it leads to good decisions [13].

Consider a canonical example from operations management: predicting customer demand to inform inventory decisions. A traditional approach trains a demand forecasting model to minimize prediction error, then uses those forecasts in an inventory optimization model. However, slight forecasting errors that lead to overstocking have different cost implications than those leading to understocking, yet standard loss functions treat them symmetrically [14]. Decision-focused learning directly optimizes the inventory costs resulting from the forecast-informed decisions, automatically learning which prediction errors matter most [15].
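The asymmetry can be made concrete with a minimal sketch. It assumes a single-period newsvendor with hypothetical unit costs: a holding cost h = 1 per leftover unit and a shortage cost b = 4 per unit of unmet demand. The order quantity simply equals the forecast. Two forecasts with the same absolute error of 10 units then lead to very different costs.

```python
def newsvendor_cost(order: float, demand: float, h: float = 1.0, b: float = 4.0) -> float:
    """Cost of ordering `order` units when `demand` materializes.

    h: holding cost per unit of excess stock (hypothetical value)
    b: shortage cost per unit of unmet demand (hypothetical value)
    """
    excess = max(order - demand, 0.0)
    shortage = max(demand - order, 0.0)
    return h * excess + b * shortage


true_demand = 100.0
over_forecast, under_forecast = 110.0, 90.0  # identical absolute error of 10 units

# A squared-error loss cannot tell these two forecasts apart...
assert (over_forecast - true_demand) ** 2 == (under_forecast - true_demand) ** 2

# ...but the order decisions they induce cost very different amounts.
cost_over = newsvendor_cost(over_forecast, true_demand)    # 10 excess units * h
cost_under = newsvendor_cost(under_forecast, true_demand)  # 10 short units * b
print(cost_over, cost_under)  # 10.0 40.0
```

A model trained on this decision cost is pushed to bias its forecasts upward, toward the b/(b+h) = 0.8 quantile of demand. A symmetric loss cannot express that preference.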

Language Models for Problem Formulation

Large language models (LLMs) trained on diverse corpora including mathematical texts, programming code, and natural language have demonstrated surprising capabilities in symbolic reasoning and code generation [16]. Recent research explores whether these models can assist in operations research problem formulation—a task that requires translating stakeholder requirements, domain constraints, and business objectives into mathematical notation [17]. This capability could democratize access to optimization by reducing the specialized expertise required and accelerating the formulation process [18].

Early investigations suggest that LLMs can perform several formulation-related tasks: generating decision variables from problem descriptions, proposing constraint structures based on domain knowledge, translating verbal requirements into algebraic expressions, and even suggesting appropriate model types (linear vs. integer vs. nonlinear) based on problem characteristics [19]. While these models do not replace human expertise, they can serve as intelligent assistants that propose initial formulations, identify missing constraints, or translate between stakeholder language and mathematical notation [20].

Objectives and Contributions

This article presents a methodological framework for integrating AI and OR in decision support systems, with three primary contributions. First, we provide detailed mathematical formulations and algorithmic procedures for decision-focused learning, including approaches to handle the non-differentiability challenges that arise when optimization problems contain discrete decisions. Second, we describe protocols for leveraging large language models in problem formulation workflows, including prompt engineering strategies, validation mechanisms, and human-in-the-loop refinement processes. Third, we present a unified architectural framework for hybrid AI-OR systems that orchestrates these components while maintaining mathematical rigor and interpretability.

The remainder of this article is organized as follows. Section II describes the core methodological components, including decision-focused learning frameworks, LLM-assisted formulation protocols, and hybrid system architectures. Section III presents validation approaches through experimental setups, synthetic benchmarks, and case study methodologies. Section IV discusses advantages, limitations, computational considerations, and integration challenges. Section V concludes with implications for research and practice.

Method Description

Decision-Focused Learning Framework

We begin by formalizing the decision-focused learning paradigm and presenting methods to implement it in practice. Consider a general setting where we must make decisions based on uncertain parameters that can be predicted from observable features.

Mathematical Formulation

Let $x \in \mathcal{X}$ represent observable features, $c \in \mathbb{R}^d$ represent uncertain cost coefficients, and $z \in \mathcal{Z}$ represent decisions from a feasible set $\mathcal{Z} \subseteq \mathbb{R}^d$. The decision-making process involves two stages:

-

Prediction:

Estimate

using a parametric model

using a parametric model  with parameters

with parameters  .

.

-

Optimization:

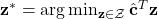

Solve for the optimal decision given the prediction:

(1)

(1)

In traditional two-stage approaches, the prediction model is trained to minimize a statistical loss between predictions and ground truth:

$$\min_\theta \frac{1}{N}\sum_{i=1}^{N} \ell\big(f_\theta(x_i), c_i\big) \tag{2}$$

where $\ell$ is a standard loss function such as mean squared error. Decision-focused learning instead directly minimizes the decision cost:

$$\min_\theta \frac{1}{N}\sum_{i=1}^{N} c_i^\top z^*\big(f_\theta(x_i)\big) \tag{3}$$

where $z^*(\hat c)$ is the optimal solution to Eq. (1) given the prediction $\hat c = f_\theta(x)$. This objective evaluates predictions by applying the true costs $c_i$ to the decisions $z^*(f_\theta(x_i))$ made using those predictions [21].
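The gap between the two-stage loss and the decision loss of Eq. (3) shows up even on a toy instance. The sketch assumes the simplest feasible set: choose exactly one of two items, so $\mathcal{Z}$ is the set of one-hot vectors and $z^*(\hat c)$ picks the item with the lowest predicted cost. The cost values are illustrative.

```python
import numpy as np

def solve(c_hat: np.ndarray) -> np.ndarray:
    """z*(c_hat) for Eq. (1) when Z is the set of one-hot vectors: pick the cheapest item."""
    z = np.zeros_like(c_hat)
    z[np.argmin(c_hat)] = 1.0
    return z

c_true = np.array([1.0, 1.1])
predictions = {
    "A": np.array([1.2, 1.0]),  # close to c_true, but ranks the items wrongly
    "B": np.array([0.5, 1.5]),  # far from c_true, but ranks the items correctly
}

for name, c_hat in predictions.items():
    mse = np.mean((c_hat - c_true) ** 2)   # per-sample squared-error loss
    decision_cost = c_true @ solve(c_hat)  # per-sample term of Eq. (3)
    print(f"{name}: MSE={mse:.3f}, decision cost={decision_cost:.1f}")
# A: MSE=0.025, decision cost=1.1
# B: MSE=0.205, decision cost=1.0
```

Predictor A wins on squared error but loses on decision cost. Only the ranking of the items matters to this optimization problem, and A gets it wrong.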

Gradient-Based Training

The central challenge in implementing decision-focused learning is computing gradients of the objective in Eq. (3) with respect to the model parameters $\theta$. This requires differentiating through the optimization problem in Eq. (1), which is non-trivial because $\arg\min$ operations are typically non-differentiable, especially when $\mathcal{Z}$ contains discrete elements [22]. With a linear objective, for instance, the solution $z^*(\hat c)$ is piecewise constant in $\hat c$. Its gradient is zero almost everywhere and undefined wherever the optimal solution jumps, so it provides no useful training signal.

Several approaches address this challenge:

Implicit Differentiation for Continuous Problems:

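When Eq. (1) is a convex program with strong duality and a unique solution, we can apply implicit differentiation using the Karush-Kuhn-Tucker (KKT) conditions. For a linear objective, a small quadratic regularizer is commonly added so that these assumptions hold. Let $z^*$ and $\lambda^*$ represent the primal and dual optimal solutions. Let $F(z, \lambda; \hat c) = 0$ collect the KKT conditions: stationarity $\nabla_z \mathcal{L}(z, \lambda; \hat c) = 0$, complementary slackness, and primal feasibility. By the implicit function theorem, provided the Jacobian of $F$ with respect to $(z, \lambda)$ is nonsingular at the solution, the gradient can be computed as:

$$\frac{\partial\, c^\top z^*}{\partial \theta} = c^\top \frac{\partial z^*}{\partial \hat c}\,\frac{\partial \hat c}{\partial \theta}, \qquad \begin{bmatrix} \partial z^* / \partial \hat c \\ \partial \lambda^* / \partial \hat c \end{bmatrix} = -\big[J_{(z,\lambda)} F\big]^{-1} J_{\hat c} F \tag{4}$$

Here $\mathcal{L}$ is the Lagrangian of the optimization problem, and both Jacobians are evaluated at $(z^*, \lambda^*; \hat c)$ [23]. This approach is implemented in differentiable optimization libraries such as cvxpylayers and qpth [24].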
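A minimal sketch of Eq. (4) for a special case in which the KKT system is linear. The problem is an equality-constrained quadratic program, $\min_z \tfrac12 z^\top Q z + \hat c^\top z$ subject to $Az = b$, with $Q$ positive definite. The quadratic term is an assumption made so that $z^*$ is unique and smooth in $\hat c$. Stationarity and primal feasibility form a linear system in $(z, \nu)$, so $\partial z^*/\partial \hat c$ can be read off the inverse of the KKT matrix. The sketch checks it against finite differences. Libraries such as qpth and cvxpylayers also handle inequality constraints, which this sketch omits.

```python
import numpy as np

def solve_qp(Q, A, b, c_hat):
    """Solve min 0.5 z^T Q z + c_hat^T z  s.t.  A z = b through its KKT system.

    KKT:  [Q  A^T] [z ]   [-c_hat]
          [A   0 ] [nu] = [  b   ]
    """
    n, m = Q.shape[0], A.shape[0]
    K = np.block([[Q, A.T], [A, np.zeros((m, m))]])
    sol = np.linalg.solve(K, np.concatenate([-c_hat, b]))
    return sol[:n], K

def dz_dc(K, n):
    """Implicit function theorem: differentiating Q z + c_hat + A^T nu = 0 and A z = b
    gives K [dz; dnu] = [-I; 0], hence dz/dc_hat = -(K^{-1})[:n, :n]."""
    return -np.linalg.inv(K)[:n, :n]

rng = np.random.default_rng(0)
n, m = 4, 1
M = rng.normal(size=(n, n))
Q = M @ M.T + n * np.eye(n)      # positive definite, so z* is unique
A = np.ones((m, n))              # e.g. a budget constraint sum(z) = 1
b = np.array([1.0])
c_hat = rng.normal(size=n)

z_star, K = solve_qp(Q, A, b, c_hat)
J = dz_dc(K, n)

# Finite-difference check of the implicit Jacobian (column j = dz*/dc_hat_j).
eps = 1e-6
J_fd = np.column_stack([
    (solve_qp(Q, A, b, c_hat + eps * e)[0] - z_star) / eps for e in np.eye(n)
])
print(np.max(np.abs(J - J_fd)))

# Chain rule for the decision loss c^T z*: gradient with respect to the prediction c_hat.
c_true = rng.normal(size=n)
grad_c_hat = J.T @ c_true
```

In a full pipeline, `grad_c_hat` would then be backpropagated into $\theta$ through $\partial \hat c / \partial \theta$.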

Perturbation- and Regularization-Based Methods: For problems where implicit differentiation is not applicable, smoothing the solution map provides an alternative. The Fenchel-Young loss framework adds a strongly convex regularization term to the optimization problem, which makes the solution smooth and differentiable [25]. Adding random perturbations to the costs and averaging the resulting solutions induces such a regularizer implicitly. The regularized problem becomes:

$$z^*_{\Omega}(\hat c) = \arg\min_{z \in \operatorname{conv}(\mathcal{Z})} \; \hat c^\top z + \tau\, \Omega(z) \tag{5}$$

where $\Omega$ is a strongly convex regularization term (for example, the negative entropy $\sum_i z_i \log z_i$) and $\tau > 0$ controls smoothing strength. Because $\Omega$ is strongly convex, the solution is unique and Lipschitz-continuous in $\hat c$, so gradients exist almost everywhere. For suitable choices of $\Omega$, they can be computed efficiently [26].
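Consider the special case of choosing one of $d$ items, where $\operatorname{conv}(\mathcal{Z})$ is the probability simplex, with $\Omega$ the negative entropy. Eq. (5) then has the closed form $z^*_\tau(\hat c) = \operatorname{softmax}(-\hat c/\tau)$, and its Jacobian is available analytically. The sketch below is a minimal illustration with arbitrary numbers. It computes both quantities and shows the solution approaching the hard $\arg\min$ of Eq. (1) as $\tau$ shrinks.

```python
import numpy as np

def regularized_argmin(c_hat, tau):
    """Solve min_{z in simplex} c_hat^T z + tau * sum(z log z), i.e. softmax(-c_hat / tau)."""
    s = -c_hat / tau
    w = np.exp(s - s.max())          # subtract the max for numerical stability
    return w / w.sum()

def regularized_jacobian(c_hat, tau):
    """dz/dc_hat = -(1/tau) * (diag(z) - z z^T): softmax Jacobian chained with s = -c_hat/tau."""
    z = regularized_argmin(c_hat, tau)
    return -(np.diag(z) - np.outer(z, z)) / tau

c_hat = np.array([1.0, 1.5, 3.0])
for tau in (1.0, 0.1, 0.01):
    print(tau, np.round(regularized_argmin(c_hat, tau), 3))
# As tau -> 0 the output approaches the one-hot vector [1, 0, 0] chosen by Eq. (1).

J = regularized_jacobian(c_hat, tau=0.5)
# Raising an item's predicted cost lowers its own weight (negative diagonal), and the
# weights stay on the simplex (each column of J sums to zero).
```

The parameter $\tau$ trades gradient informativeness against fidelity to the original discrete problem.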

Stochastic Smoothing: Another class of methods approximates discrete optimization problems with continuous relaxations that admit gradient estimation. Randomized smoothing techniques add noise to the objective and estimate gradients via perturbation analysis [27]. The Gumbel-softmax trick and related methods have been adapted for combinatorial optimization contexts [28].
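One concrete form of randomized smoothing, in the spirit of differentiable perturbed optimizers, replaces $z^*(\hat c)$ by its expectation under Gaussian noise: $y_\varepsilon(\hat c) = \mathbb{E}[z^*(\hat c + \varepsilon\eta)]$ with $\eta \sim \mathcal{N}(0, I)$. Because this is a Gaussian smoothing, its Jacobian can be estimated from the same samples as $\mathbb{E}[z^*(\hat c + \varepsilon\eta)\,\eta^\top]/\varepsilon$ (Stein's lemma), so only calls to an ordinary black-box solver are needed. The sketch assumes the one-hot selection problem again. The sample size and $\varepsilon$ are arbitrary choices.

```python
import numpy as np

def hard_argmin(c):
    """Black-box solver for Eq. (1) with Z = one-hot vectors."""
    z = np.zeros_like(c)
    z[np.argmin(c)] = 1.0
    return z

def perturbed_solution_and_jacobian(c_hat, eps=0.5, n_samples=20000, seed=0):
    """Monte Carlo estimates of y_eps(c_hat) = E[z*(c_hat + eps*eta)] and its Jacobian."""
    rng = np.random.default_rng(seed)
    eta = rng.standard_normal((n_samples, c_hat.size))
    Z = np.stack([hard_argmin(c_hat + eps * e) for e in eta])  # (n_samples, d)
    y = Z.mean(axis=0)
    # Stein's lemma: d y / d c_hat = E[z*(c_hat + eps*eta) eta^T] / eps
    J = Z.T @ eta / (n_samples * eps)
    return y, J

c_hat = np.array([1.0, 1.2, 3.0])
y, J = perturbed_solution_and_jacobian(c_hat)
print(np.round(y, 3))  # fractional "soft" decision, concentrated on items 0 and 1
```

Larger $\varepsilon$ gives smoother but more biased gradients. More samples reduce the variance of the estimate at the cost of extra solver calls.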

Algorithm for Decision-Focused Learning

We present a general algorithm for training decision-focused models:

Algorithm 1: Decision-Focused Learning Training

    Input: dataset D = {(x_i, c_i)}_{i=1}^N, prediction model f_θ,
           optimization problem structure, learning rate α, epochs T
    1.  Initialize model parameters θ
    2.  For epoch t = 1 to T:
    3.      For each batch B ⊂ D:
    4.          For each (x, c) in B:
    5.              ĉ ← f_θ(x)                       // Forward prediction
    6.              z* ← solve_optimization(ĉ)       // Solve Eq. (1)
    7.              L_(x,c) ← c^T z*                  // True cost of the decision
    8.          L_B ← (1/|B|) Σ_{(x,c) ∈ B} L_(x,c)   // Batch estimate of Eq. (3)
    9.          ∇θ ← compute_gradient(L_B, θ)        // Backpropagate through the optimization layer
    10.         θ ← θ - α∇θ                          // One parameter update per batch
    11. Return θ

    Subroutine: compute_gradient(L, θ)
        Compute ∂L/∂θ using one of: implicit differentiation (Eq. 4),
        perturbation/regularization methods (Eq. 5), or stochastic smoothing

The critical subroutine compute_gradient implements one of the differentiability-enabling techniques described above, chosen based on problem structure and computational resources [29].
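The loop in Algorithm 1 can be made concrete with numpy alone under several simplifying assumptions:

- a linear model $\hat c = Wx$;
- the one-hot selection problem as Eq. (1);
- the entropy-regularized solution of Eq. (5) as the compute_gradient choice during training, with the hard $\arg\min$ used at evaluation.

The data generator, learning rate, $\tau$ and batch size are all illustrative. This is a sketch of the training structure, not a tuned implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, d = 2000, 5, 4                                 # samples, features, items (illustrative)
B_true = rng.normal(size=(d, p))
X = rng.normal(size=(n, p))
C = X @ B_true.T + 0.3 * rng.normal(size=(n, d))     # true costs c_i (synthetic)

def regret(W):
    """Mean of c^T z*(c_hat) - c^T z*(c) over the dataset, using the hard solver."""
    chosen = C[np.arange(n), np.argmin(X @ W.T, axis=1)]
    return np.mean(chosen - C.min(axis=1))

def softmin(C_hat, tau):
    """Row-wise entropy-regularized solution of Eq. (5): softmax(-c_hat / tau)."""
    S = -C_hat / tau
    E = np.exp(S - S.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)

W = np.zeros((d, p))                                 # 1. initialize theta
alpha, tau, T, batch = 0.1, 0.5, 30, 100
initial_regret = regret(W)
for epoch in range(T):                               # 2. epochs
    perm = rng.permutation(n)
    for start in range(0, n, batch):                 # 3. batches
        idx = perm[start:start + batch]
        Xb, Cb = X[idx], C[idx]
        Zb = softmin(Xb @ W.T, tau)                  # 5-6. predict, then smoothed solve
        # 7-8. per-sample loss c^T z; its gradient w.r.t. c_hat is
        #      -(1/tau) * z * (c - c^T z)  (softmax Jacobian applied to c)
        expected = np.sum(Cb * Zb, axis=1, keepdims=True)
        G_chat = -(Zb * (Cb - expected)) / tau
        grad_W = G_chat.T @ Xb / len(idx)            # 9. chain rule through c_hat = W x
        W -= alpha * grad_W                          # 10. one update per batch
final_regret = regret(W)
print(initial_regret, final_regret)
```

Swapping `softmin` and its gradient for a KKT-based or perturbation-based estimator changes only steps 5 to 9. The outer loop stays the same.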

LLM-Assisted Problem Formulation

The second pillar of our methodology leverages large language models to assist in translating informal problem descriptions into formal mathematical programs. This capability can significantly reduce the expertise barrier and time required for formulation [30].

Formulation Workflow

We propose a structured workflow for LLM-assisted formulation consisting of five stages:

- Problem Elicitation: Stakeholders provide a natural language description of the decision problem, including objectives, constraints, and relevant domain information.

- Structured Decomposition: The LLM decomposes the problem into standard OR components: decision variables, objective function, constraints, and parameters. This stage uses carefully designed prompts that guide the model through a systematic analysis.

- Mathematical Translation: The LLM generates formal mathematical notation for each component, producing a complete problem formulation either in mathematical form or in an algebraic modeling language (e.g., AMPL or Pyomo).

- Validation and Refinement: A human expert reviews the formulation for correctness, completeness, and alignment with stakeholder intent. Automated validation checks test for common issues (e.g., unbounded variables, infeasibility).

- Iterative Improvement: Based on validation feedback, the formulation is refined through iterative interaction between the LLM, automated validators, and human experts.

Prompt Engineering for Formulation

Effective LLM-assisted formulation requires carefully designed prompts that provide context, structure, and examples. We employ a few-shot prompting approach in which the prompt includes:

- Role specification defining the LLM as an operations research expert

- Task description outlining the formulation objective

- Format specification indicating desired output structure

- Few-shot examples showing problem descriptions paired with correct formulations

- Step-by-step reasoning instructions encouraging systematic decomposition

A template prompt structure is:

    You are an expert in operations research and mathematical optimization.
    Your task is to translate the following problem description into a formal
    mathematical program.

    Problem Description: [Natural language description]

    Please provide:
    1. Decision Variables: Define each variable with its domain and meaning
    2. Objective Function: Express mathematically what should be minimized or maximized
    3. Constraints: List all constraints in mathematical notation
    4. Parameters: Identify all parameters and their interpretations

    Use the following format for your formulation:
    [Show example format]

    Think through the problem step-by-step before providing the final formulation.
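The template can be assembled programmatically, so that the five prompt components listed earlier stay separate and reusable. The class and field names below are hypothetical, and the few-shot example is a placeholder rather than a validated formulation. No particular LLM API is assumed; the output is just the prompt string.

```python
from dataclasses import dataclass, field


@dataclass
class FormulationPrompt:
    """Hypothetical container for the prompt components described above."""
    problem_description: str
    few_shot_examples: list[tuple[str, str]] = field(default_factory=list)  # (description, formulation)
    role: str = "You are an expert in operations research and mathematical optimization."
    task: str = ("Your task is to translate the following problem description "
                 "into a formal mathematical program.")
    output_format: str = (
        "Please provide:\n"
        "1. Decision Variables: Define each variable with its domain and meaning\n"
        "2. Objective Function: Express mathematically what should be minimized or maximized\n"
        "3. Constraints: List all constraints in mathematical notation\n"
        "4. Parameters: Identify all parameters and their interpretations")
    reasoning: str = "Think through the problem step-by-step before providing the final formulation."

    def render(self) -> str:
        """Role, task, few-shot examples, the new problem, output format, reasoning cue."""
        parts = [self.role, self.task]
        for i, (desc, formulation) in enumerate(self.few_shot_examples, start=1):
            parts.append(f"Example {i}\nProblem Description: {desc}\nFormulation:\n{formulation}")
        parts += [f"Problem Description: {self.problem_description}",
                  self.output_format, self.reasoning]
        return "\n\n".join(parts)


prompt = FormulationPrompt(
    problem_description="Decide how many units of two products to make to maximize profit "
                        "given limited machine hours.",
    few_shot_examples=[("<example description>", "<example formulation>")],
)
print(prompt.render())
```

Keeping the components as fields makes it easy to vary one of them, such as the number of few-shot examples, while holding the rest fixed.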

Validation Mechanisms

LLM outputs require rigorous validation because language models can produce syntactically correct but semantically incorrect formulations [31]. We implement multi-level validation:

Syntactic Validation: Automated parsing checks that the formulation follows valid mathematical syntax and can be instantiated in an algebraic modeling language. This catches obvious errors like undefined variables or malformed expressions.

Consistency Checking: Dimensional analysis verifies that units are consistent across equations. Automated solvers test feasibility with simple parameter values to ensure the constraint set is non-empty.

Semantic Review: Human experts assess whether the formulation captures the intended problem logic. This step involves checking that constraints properly encode business rules and that the objective aligns with stated goals.

Test Case Validation: When available, the formulation is tested on instances with known optimal solutions or behavior to verify correctness [32].
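The syntactic level can be partly automated with a small checker, sketched here under stated assumptions. The formulation is a hypothetical dictionary whose objective and constraints are written in Python expression syntax, a stand-in for a real algebraic modeling language. The checker flags malformed expressions, undefined symbols, and variables with no bounds. Dimensional analysis, feasibility tests and semantic review are outside its scope.

```python
import ast


def validate_syntax(formulation: dict) -> list[str]:
    """Hypothetical syntactic checks on an LLM-produced formulation.

    Assumed keys: 'variables' (name -> (lower, upper)), 'parameters' (names),
    'objective' and 'constraints' (strings in Python expression syntax).
    """
    issues = []
    declared = set(formulation["variables"]) | set(formulation["parameters"])
    expressions = [("objective", formulation["objective"])] + [
        (f"constraint {i}", expr) for i, expr in enumerate(formulation["constraints"])
    ]
    for label, expr in expressions:
        try:
            tree = ast.parse(expr, mode="eval")
        except SyntaxError:
            issues.append(f"{label}: malformed expression {expr!r}")
            continue
        names = {node.id for node in ast.walk(tree) if isinstance(node, ast.Name)}
        for name in sorted(names - declared):
            issues.append(f"{label}: undefined symbol {name!r}")
    for name, (lower, upper) in formulation["variables"].items():
        if lower is None and upper is None:
            issues.append(f"variable {name!r} has no bounds (check for unboundedness)")
    return issues


candidate = {
    "variables": {"x": (0, None), "y": (0, None), "s": (None, None)},
    "parameters": {"cap", "profit_x", "profit_y"},
    "objective": "profit_x*x + profit_y*y",
    "constraints": ["2*x + y <= cap", "x + 3*z <= 10", "x + y >="],
}
for issue in validate_syntax(candidate):
    print(issue)
# constraint 1: undefined symbol 'z'
# constraint 2: malformed expression 'x + y >='
# variable 's' has no bounds (check for unboundedness)
```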

Hybrid AI-OR System Architecture

Integrating decision-focused learning and LLM-assisted formulation requires a coherent system architecture that orchestrates data pipelines, model training, optimization solving, and human interaction. We present a modular framework designed for flexibility and extensibility.

Architectural Components

The hybrid system comprises five primary modules:

Formulation Module: Interfaces with stakeholders to elicit problem requirements, leverages LLMs to generate candidate formulations, and manages the validation and refinement process. Outputs a validated mathematical program specification.

Data Integration Module: Connects to organizational data sources, performs feature engineering, and prepares training datasets for predictive models. Handles data preprocessing, missing value imputation, and feature selection.

Predictive Learning Module: Implements decision-focused learning algorithms to train models that predict uncertain parameters. Includes neural network architectures, training loops with optimization-layer backpropagation, and hyperparameter tuning.

Optimization Solver Module: Provides interfaces to multiple optimization solvers (linear programming, mixed-integer programming, nonlinear programming) with automatic solver selection based on problem characteristics. Implements both exact and heuristic methods.

Decision Support Interface: Presents results to decision-makers with explanations, sensitivity analyses, and what-if scenario exploration tools. Supports interactive refinement of problem formulations and model parameters.

[Conceptual Diagram: System Architecture]

    ┌───────────────────────────────────────────────────────┐
    │              Decision Support Interface               │
    │    (Visualization, Explanation, What-if Analysis)     │
    └───────────────────────────┬───────────────────────────┘
                                │
                ┌───────────────┼───────────────┐
                │               │               │
          ┌─────▼──────┐  ┌─────▼──────┐  ┌─────▼──────┐
          │ Formulation│  │ Predictive │  │Optimization│
          │   Module   │  │  Learning  │  │   Solver   │
          │            │  │   Module   │  │   Module   │
          │(LLM-based) │  │   (DFL)    │  │ (Multiple  │
          │            │  │            │  │  Solvers)  │
          └─────┬──────┘  └─────┬──────┘  └─────┬──────┘
                │               │               │
                └───────┬───────┴───────┬───────┘
                        │               │
                   ┌────▼───────────────▼────┐
                   │ Data Integration Module │
                   │   (ETL, Features, DB)   │
                   └─────────────────────────┘

Integration Protocols

Seamless integration across modules requires standardized interfaces and data formats. We adopt the following protocols:

Formulation Representation: Mathematical programs are represented in a structured JSON format that includes decision variables with types and bounds, objective function as an expression tree, constraints as a list of inequalities and equalities, and parameter specifications. This representation is agnostic to specific modeling languages and can be translated to AMPL, Pyomo, GAMS, or solver-specific formats [33].
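Here is a minimal sketch of such a representation. It assumes a linear program and a hypothetical schema in which each linear expression is stored as a coefficient map rather than a full expression tree, which is a simplification. The translator turns the specification into the arrays `c`, `A_ub`, `b_ub` and `bounds` used by typical LP interfaces, plus integrality flags. The field names are illustrative, not a standard.

```python
import json
import numpy as np

spec = json.loads("""
{
  "variables": [
    {"name": "x", "type": "continuous", "lb": 0, "ub": null},
    {"name": "y", "type": "integer",    "lb": 0, "ub": 10}
  ],
  "objective": {"sense": "max", "terms": {"x": 3, "y": 2}},
  "constraints": [
    {"name": "machine_hours", "terms": {"x": 2, "y": 1}, "sense": "<=", "rhs": 8},
    {"name": "min_x",         "terms": {"x": 1},         "sense": ">=", "rhs": 1}
  ]
}
""")


def to_matrices(spec):
    """Translate the JSON spec into: minimize c^T v  s.t.  A_ub v <= b_ub, plus bounds."""
    names = [v["name"] for v in spec["variables"]]
    col = {name: j for j, name in enumerate(names)}
    c = np.zeros(len(names))
    for name, coef in spec["objective"]["terms"].items():
        c[col[name]] = coef
    if spec["objective"]["sense"] == "max":
        c = -c                                        # max f  is  min -f
    rows, rhs = [], []
    for con in spec["constraints"]:
        if con["sense"] not in ("<=", ">="):
            raise ValueError("this sketch only handles <= and >= constraints")
        row = np.zeros(len(names))
        for name, coef in con["terms"].items():
            row[col[name]] = coef
        sign = -1.0 if con["sense"] == ">=" else 1.0  # a^T v >= b  is  -a^T v <= -b
        rows.append(sign * row)
        rhs.append(sign * con["rhs"])
    bounds = [(v["lb"], v["ub"]) for v in spec["variables"]]
    integrality = [v["type"] == "integer" for v in spec["variables"]]
    return c, np.array(rows), np.array(rhs), bounds, integrality


c, A_ub, b_ub, bounds, integrality = to_matrices(spec)
```

Because the translation is confined to one function, the same specification could be routed to other back ends by adding further translators.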

Prediction Interface: The predictive learning module exposes a standardized API that accepts feature vectors and returns parameter predictions along with uncertainty estimates (e.g., prediction intervals or probability distributions). This allows the optimization module to incorporate uncertainty in robust or stochastic programming frameworks [34].

Solver Abstraction: The optimization solver module implements an adapter pattern that provides a unified interface to heterogeneous solvers. Problem characteristics (linearity, convexity, problem size) automatically determine solver selection, with fallback mechanisms for challenging instances.
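The adapter-and-fallback idea can be sketched with hypothetical class names and a toy selection rule. The adapters wrap plain Python callables rather than any real solver API, which keeps the control flow visible without assuming a specific solver package.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class ProblemProfile:
    linear: bool
    convex: bool
    has_integers: bool
    n_vars: int


class SolverAdapter(Protocol):
    """Common interface every solver adapter exposes."""
    name: str

    def solve(self, problem: dict) -> dict: ...


@dataclass
class CallableAdapter:
    """Hypothetical adapter wrapping a plain solve function."""
    name: str
    fn: Callable[[dict], dict]

    def solve(self, problem: dict) -> dict:
        return self.fn(problem)


def candidate_order(profile: ProblemProfile) -> list[str]:
    """Toy rule: rank solver classes by linearity, convexity, integrality, and size."""
    if profile.linear and not profile.has_integers:
        return ["lp", "mip", "heuristic"]
    if profile.linear:
        return ["mip", "heuristic"] if profile.n_vars <= 100_000 else ["heuristic", "mip"]
    if profile.convex and not profile.has_integers:
        return ["nlp", "heuristic"]
    return ["heuristic"]


def solve_with_fallback(problem: dict, profile: ProblemProfile,
                        registry: dict[str, SolverAdapter]) -> tuple[str, dict]:
    """Try solvers in ranked order and fall back to the next one on failure."""
    errors = {}
    for key in candidate_order(profile):
        if key not in registry:
            continue
        try:
            return key, registry[key].solve(problem)
        except Exception as exc:  # e.g. time limit or numerical trouble
            errors[key] = repr(exc)
    raise RuntimeError(f"all candidate solvers failed: {errors}")


def failing_mip(problem: dict) -> dict:
    raise TimeoutError("time limit reached")


registry = {
    "mip": CallableAdapter("mip", failing_mip),
    "heuristic": CallableAdapter("heuristic", lambda problem: {"status": "feasible"}),
}
profile = ProblemProfile(linear=True, convex=True, has_integers=True, n_vars=500)
print(solve_with_fallback({}, profile, registry))  # ('heuristic', {'status': 'feasible'})
```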

Feedback Loops: The architecture supports closed-loop learning where deployed decisions generate new data that continuously improves predictive models. Performance monitoring tracks decision quality over time and triggers model retraining when prediction performance degrades [35].
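The retraining trigger can be realized very simply, sketched here under assumptions. Decision quality is tracked as realized regret: the cost of the deployed decision minus the cost of the best decision in hindsight, which is computable once the true parameters are observed. Retraining is triggered when a rolling mean exceeds a tolerance above the regret level measured at deployment. The window size and tolerance are hypothetical tuning parameters.

```python
from collections import deque


class RetrainingMonitor:
    """Hypothetical monitor: flag retraining when rolling regret drifts above baseline."""

    def __init__(self, baseline_regret: float, window: int = 50, tolerance: float = 0.25):
        self.baseline = baseline_regret
        self.tolerance = tolerance                # allowed relative increase over baseline
        self.recent = deque(maxlen=window)

    def record(self, decision_cost: float, hindsight_optimal_cost: float) -> bool:
        """Log one deployed decision; return True if retraining should be triggered."""
        self.recent.append(decision_cost - hindsight_optimal_cost)
        if len(self.recent) < self.recent.maxlen:
            return False                          # wait until the window is full
        rolling = sum(self.recent) / len(self.recent)
        return rolling > (1 + self.tolerance) * self.baseline


monitor = RetrainingMonitor(baseline_regret=2.0, window=5)
flags = [monitor.record(cost, 100.0) for cost in [102, 101, 103, 102, 102, 106, 107, 108]]
print(flags)  # [False, False, False, False, False, True, True, True]
```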

Human-in-the-Loop Integration

While automation reduces manual effort, human expertise remains essential for judgment, validation, and handling edge cases. Our framework explicitly incorporates human interaction points:

- Formulation review and approval before deployment

- Override mechanisms allowing experts to modify constraints or objectives

- Explanation interfaces that communicate why certain decisions were recommended

- Active learning prompts that request human labels for uncertain predictions

- Feedback collection on decision outcomes to improve models

This human-in-the-loop approach balances automation benefits with the irreplaceable value of domain expertise and contextual judgment [36].

Validation and Comparison

Experimental Methodology

Validating hybrid AI-OR methodologies requires evaluation across multiple dimensions: decision quality, computational efficiency, formulation accuracy, and usability. We describe experimental frameworks for assessing each component of the proposed methodology.

Decision-Focused Learning Validation

We validate decision-focused learning through controlled experiments comparing it against traditional two-stage approaches. The experimental protocol follows these steps:

- Benchmark Selection: Choose canonical optimization problems with well-defined parameter uncertainty (e.g., portfolio optimization with uncertain returns, newsvendor inventory with uncertain demand, shortest path with uncertain costs).

- Data Generation: Create synthetic datasets where features $x$ and uncertain parameters $c$ have known relationships, allowing ground-truth evaluation. For realism, relationships include non-linearity and noise.

- Baseline Comparison: Train baseline models using standard loss functions (MSE, MAE) in the two-stage paradigm. Compare against decision-focused models trained via Eq. (3).

- Evaluation Metrics: Measure both prediction accuracy (RMSE, MAE) and decision quality (true objective value achieved, regret compared to optimal decisions with perfect information).

- Statistical Testing: Perform paired statistical tests (e.g., Wilcoxon signed-rank) across multiple problem instances to assess significance of performance differences.
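A minimal generator for the data-generation step. Features $x$ are Gaussian. Costs come from a hidden random linear map passed through a polynomial of degree `deg` (1 is linear; larger values add non-linearity) and multiplied by uniform noise. This functional form and all constants are assumptions, loosely modeled on generators used in the predict-then-optimize literature. Because the noise-free map is known, ground-truth costs are available when evaluating regret.

```python
import numpy as np


def generate_instances(n, p, d, deg=2, noise_half_width=0.5, seed=0):
    """Synthetic (x, c) pairs with a known non-linear feature-cost relationship.

    c_j = [ (B x / sqrt(p) + 3)_j ** deg + 1 ] * u_j,   u_j ~ Uniform[1 - w, 1 + w]
    Returns features X (n, p), noisy costs C (n, d), and noise-free costs C_clean.
    """
    rng = np.random.default_rng(seed)
    B = rng.binomial(1, 0.5, size=(d, p)).astype(float)   # hidden linear map
    X = rng.standard_normal((n, p))
    C_clean = (X @ B.T / np.sqrt(p) + 3.0) ** deg + 1.0
    U = rng.uniform(1 - noise_half_width, 1 + noise_half_width, size=(n, d))
    return X, C_clean * U, C_clean


X, C, C_clean = generate_instances(n=1000, p=5, d=10, deg=4)
```

Sweeping `deg` and `noise_half_width` allows regret to be compared across increasing misspecification and noise levels, with the same seeds reused for the paired tests.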

Performance Metrics

We employ multiple metrics to comprehensively assess performance:

Decision Regret: The primary metric for decision-focused learning is regret, defined as the difference between the cost of decisions based on predictions and the cost of decisions made with perfect information:

$$\mathrm{Regret}(\hat c, c) = c^\top z^*(\hat c) - c^\top z^*(c) \tag{6}$$

Lower regret indicates better alignment between predictions and decision quality [37].

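Prediction Accuracy: Standard metrics like root mean squared error (RMSE) and mean absolute error (MAE) measure prediction quality in isolation, allowing comparison with traditional methods:

$$\mathrm{RMSE} = \sqrt{\frac{1}{Nd}\sum_{i=1}^{N} \lVert \hat c_i - c_i \rVert_2^2}, \qquad \mathrm{MAE} = \frac{1}{Nd}\sum_{i=1}^{N} \lVert \hat c_i - c_i \rVert_1 \tag{7}$$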

Computational Efficiency: Training time, inference time, and memory consumption are measured to assess practical scalability. We report wall-clock time and computational complexity.

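Solution Quality Gap: For problems with known optimal solutions, we measure the optimality gap of an evaluated decision $\hat z$:

$$\mathrm{Gap} = \frac{c^\top \hat z - c^\top z^*(c)}{\lvert c^\top z^*(c) \rvert} \times 100\% \tag{8}$$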
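These metrics can be computed together over a test set. The sketch below assumes the one-hot selection problem as a stand-in for Eq. (1) and uses two hand-made instances. It also reports a normalized regret (total regret divided by total optimal cost), a common variant that is an addition here rather than part of the protocol above.

```python
import numpy as np


def solve(c):
    """z*(c) for the one-hot selection problem used as a stand-in for Eq. (1)."""
    z = np.zeros_like(c)
    z[np.argmin(c)] = 1.0
    return z


def evaluate(C_hat, C):
    """Decision and prediction metrics over a test set of N instances (rows)."""
    realized = np.array([c @ solve(ch) for ch, c in zip(C_hat, C)])  # c^T z*(c_hat)
    optimal = np.array([c @ solve(c) for c in C])                    # c^T z*(c)
    regret = realized - optimal
    return {
        "mean_regret": regret.mean(),
        "normalized_regret": regret.sum() / np.abs(optimal).sum(),
        "rmse": np.sqrt(np.mean((C_hat - C) ** 2)),
        "mae": np.mean(np.abs(C_hat - C)),
        "mean_gap_pct": 100 * np.mean(regret / np.abs(optimal)),    # assumes optimal != 0
    }


C = np.array([[1.0, 2.0, 3.0], [4.0, 2.0, 5.0]])
C_hat = np.array([[1.5, 1.2, 3.0], [4.0, 2.5, 5.0]])
print(evaluate(C_hat, C))
```

Only the first instance contributes regret: its prediction ranks item 1 below item 0. The second prediction is also inaccurate but still selects the optimal item.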

Case Studies

We present three case study domains demonstrating the methodology's applicability across diverse problem types.

Case Study 1: Energy Portfolio Optimization

Consider an energy company that must decide daily how much electricity to purchase from various sources (spot market, long-term contracts, renewable generation) to meet uncertain demand while minimizing cost [38]. Traditional approaches predict demand independently using time series models, then optimize the portfolio given those predictions.

Problem Formulation:

Let $q_j$ represent the quantity purchased from source $j$ with per-unit cost $p_j$, and let $d$ represent demand. The optimization problem is:

$$\min_{q \ge 0} \; \sum_j p_j q_j + \alpha \Big(d - \sum_j q_j\Big)^{+} + \beta \Big(\sum_j q_j - d\Big)^{+} \tag{9}$$

subject to capacity and minimum purchase constraints. Here $(y)^+ = \max(y, 0)$, $\alpha$ is the penalty for shortage, and $\beta$ is the penalty for excess. Demand $d$ is uncertain and predicted from features such as temperature, day of week, and historical usage patterns.

Decision-Focused Implementation:

A neural network $g_\theta$ predicts demand $\hat d = g_\theta(x)$ from features $x$. Training minimizes the true cost in Eq. (9), evaluated at the true demand $d$, for the decisions $q^*(\hat d)$ obtained by solving Eq. (9) with the predicted demand. Gradients are computed using implicit differentiation, since for a fixed demand estimate Eq. (9) is a convex (piecewise-linear) program.
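The next sketch shows the kind of gradient signal decision-focused training receives in this case study. It makes simplifying assumptions not stated above:

- minimum-purchase constraints are dropped;
- every source is cheaper than the shortage penalty α, so the optimal purchase for a demand estimate fills it in merit order (cheapest source first) up to capacity.

With an excess penalty β, the realized cost is then piecewise linear in the demand estimate. Its slope is the marginal source price minus α when under-predicting and the marginal price plus β when over-predicting. Prices, capacities and penalties are hypothetical.

```python
import numpy as np

prices = np.array([30.0, 45.0, 80.0])      # per MWh: contract, renewable, spot (hypothetical)
capacity = np.array([50.0, 30.0, 100.0])   # MWh available from each source
alpha, beta = 200.0, 20.0                  # shortage and excess penalties


def purchase(d_hat):
    """Optimal purchases for demand estimate d_hat: fill it in merit order (all prices < alpha)."""
    q, remaining = np.zeros_like(prices), d_hat
    for j in np.argsort(prices):
        q[j] = min(capacity[j], max(remaining, 0.0))
        remaining -= q[j]
    return q


def realized_cost(d_hat, d_true):
    """Purchase cost plus penalties at the true demand, for the decision made with d_hat."""
    q = purchase(d_hat)
    total = q.sum()
    return prices @ q + alpha * max(d_true - total, 0.0) + beta * max(total - d_true, 0.0)


d_true, h = 100.0, 1e-4
for d_hat in (90.0, 110.0):                # under- vs over-prediction by 10 MWh
    slope = (realized_cost(d_hat + h, d_true) - realized_cost(d_hat - h, d_true)) / (2 * h)
    print(d_hat, realized_cost(d_hat, d_true), round(slope, 2))
```

Under-predicting is penalized more steeply here because α exceeds β. A decision-focused model trained on this cost is therefore pushed toward higher demand estimates.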

Results: Experiments on synthetic data generated from realistic demand patterns showed that decision-focused learning reduced cost by 8-12% compared to two-stage approaches, despite sometimes having slightly higher prediction RMSE. The model learned to be more conservative when shortage penalties were high, automatically encoding this asymmetric cost structure [39].

Case Study 2: Workforce Scheduling with Uncertain Arrivals

A hospital emergency department must schedule nurses across shifts to handle uncertain patient arrivals while minimizing staffing costs and service delays [40]. This involves predicting arrival patterns and solving a mixed-integer program for scheduling.

Problem Characteristics: The scheduling problem includes integer decision variables (number of nurses on each shift), complex constraints (minimum staffing levels, shift transition rules, individual availability), and a non-linear objective balancing costs and service quality. The uncertain parameter is the arrival rate distribution across time periods.

Methodological Approach: Due to discrete variables, we employ the Fenchel-Young loss with entropy regularization (Eq. 5) to enable gradient-based learning. The predictive model estimates arrival rates from historical data, day-of-week features, and external factors (holidays, weather, local events).

LLM-Assisted Formulation: The initial problem formulation was generated through LLM assistance, where domain experts provided natural language descriptions of scheduling rules, and the LLM proposed mathematical constraints. After validation and refinement, this formulation served as the basis for the decision-focused learning implementation.

Results: Validation on historical data demonstrated 15% improvement in schedule quality (measured by a weighted combination of cost and service level) compared to schedules based on traditional demand forecasting. The approach also reduced formulation time from several days of expert effort to several hours of guided LLM interaction and validation [41].

Case Study 3: Supply Chain Network Design

A manufacturing company must design its distribution network by selecting warehouse locations and allocating capacity to minimize total costs under uncertain regional demands [42]. This strategic decision involves high stakes and long-term commitments.

Problem Structure: The network design problem is a two-stage stochastic program where first-stage decisions (facility locations and capacities) are made before uncertainty resolves, and second-stage decisions (product flows) adapt to realized demand. The complete formulation includes binary location variables, continuous flow variables, and numerous constraints encoding network structure and capacity limits.

Integration Approach: Historical demand data is used to train a decision-focused model that predicts demand distributions in each region. The prediction model feeds directly into the stochastic programming formulation, which is solved using sample average approximation [43]. The entire pipeline—from problem formulation through model training to optimization—was implemented in the proposed hybrid architecture.
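Sample average approximation replaces the expectation over demand with an average over sampled scenarios. The sketch below is a simplification of the multi-facility design problem above to a single-region capacity decision:

- first-stage capacity x costs f per unit;
- in the second stage, demand above capacity is served by outsourcing at a per-unit cost s.

The lognormal scenarios stand in for the predicted demand distribution. All numbers are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)
f, s = 4.0, 10.0                             # capacity cost, per-unit outsourcing (recourse) cost
scenarios = rng.lognormal(mean=np.log(100.0), sigma=0.3, size=2000)  # sampled demand


def saa_objective(x, demand_samples):
    """First-stage cost plus the sample average of the second-stage recourse cost."""
    recourse = s * np.maximum(demand_samples - x, 0.0)
    return f * x + recourse.mean()


candidates = np.linspace(0.0, 300.0, 3001)   # grid search over first-stage capacity
values = np.array([saa_objective(x, scenarios) for x in candidates])
x_saa = candidates[np.argmin(values)]

# With this simple recourse, the SAA optimum sits near the (s - f)/s quantile of the samples.
print(x_saa, np.quantile(scenarios, (s - f) / s))
```

In the full network design model, the grid search would be replaced by a mixed-integer program over all scenarios at once. The averaging over sampled demands is the same.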

Formulation Validation: The LLM-assisted formulation module generated 85% of the constraint structure correctly on the first attempt, with human experts adding refinements for edge cases and specialized business rules. This significantly accelerated the formulation process.

Results: Backtesting on five years of historical data showed that networks designed using decision-focused learning outperformed those based on traditional demand forecasting by 7% in out-of-sample cost performance. The hybrid approach also enabled faster scenario analysis, allowing decision-makers to explore what-if questions interactively [44].

Comparative Analysis

Across these case studies, several patterns emerged regarding when and how the hybrid AI-OR methodology provides value.

| Dimension | Traditional Approach | Hybrid AI-OR Approach | Net Effect |
|---|---|---|---|
| Decision Quality (Cost) | Baseline | 7-15% reduction | Significant |
| Formulation Time | Days to weeks | Hours to days | 5-10x faster |
| Prediction Accuracy | Optimized for RMSE | Sometimes slightly worse RMSE | Varies |
| Training Time | Minutes to hours | Hours to days | Slower |
| Interpretability | Clear separation of components | More complex pipeline | More challenging |
| Adaptability | Manual reformulation required | Faster iteration with LLM assistance | Significant |

These results suggest that the hybrid methodology is particularly valuable when: (1) the gap between prediction accuracy and decision quality is substantial due to asymmetric costs or complex decision structures, (2) formulation is complex and time-consuming, requiring deep expertise, and (3) the problem context allows for computational investment in training to achieve operational savings [45].

Discussion

Advantages and Value Proposition

The integration of AI and operations research through decision-focused learning and LLM-assisted formulation offers several distinct advantages over traditional approaches.

Alignment of Learning and Decision Objectives

Perhaps the most fundamental advantage is that decision-focused learning directly optimizes for what matters—decision quality rather than statistical prediction accuracy. This alignment can yield substantial improvements in domains where the mapping from predictions to decisions is complex or where cost asymmetries exist [46]. By training models end-to-end through the optimization layer, the learning process automatically discovers which prediction errors are most consequential and adjusts accordingly.

Reduced Formulation Barriers

LLM-assisted formulation has the potential to democratize access to optimization by reducing the specialized expertise required. While human validation remains essential, the ability to generate initial formulations from natural language descriptions significantly lowers the activation energy for applying OR techniques [47]. This is particularly valuable in domains where optimization expertise is scarce but decision problems are abundant.

Accelerated Iteration and Prototyping

Traditional optimization projects often involve lengthy formulation phases where analysts iteratively refine models through time-consuming cycles of stakeholder consultation, manual formulation, testing, and revision. The hybrid approach accelerates this process by enabling rapid prototyping of candidate formulations that can be quickly tested and refined [48]. This faster iteration supports more thorough exploration of alternative problem framings.

Data-Aware Optimization

By tightly coupling data-driven prediction with optimization, the methodology yields data-aware optimization systems that can adapt to changing patterns in the environment. As new data arrive, the predictive models can be retrained against the same decision objective, so the system can be updated as conditions change while remaining aligned with decision quality [49].

Limitations and Challenges

Despite these advantages, the methodology faces several important limitations that must be acknowledged and addressed in future research.

Computational Complexity

Training decision-focused models is computationally intensive because each training step requires solving the optimization problem for every instance in the batch and then computing gradients through the optimization layer [50]. For large-scale problems or complex formulations, this can become prohibitively expensive. The burden is particularly severe for mixed-integer programs. Their optimal solution changes in discrete jumps as the predicted parameters vary, so exact gradients are zero almost everywhere or undefined, and the approximation methods used in their place introduce additional overhead.

Mitigation strategies include using smaller surrogate problems during training, limiting the frequency of full optimization solves through caching and warm-starting, and developing more efficient gradient approximation methods. However, the fundamental tension between training efficiency and optimization fidelity remains an open challenge [51].
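The caching idea can be illustrated with a sketch that wraps an exact solver for a toy problem (select the k cheapest of n items) and calls that solver only on a fixed schedule. Between exact solves, the wrapper returns the best solution already held in a pool of previously computed solutions. The class name `CachedSolver`, the `refresh_every` parameter, and the fixed schedule are hypothetical choices for this sketch. Every pooled solution is feasible, so the cheap lookup never violates constraints, but it may be suboptimal for the current predicted costs. That is the efficiency versus fidelity tension noted above.

```python
import numpy as np


def solve_top_k(costs: np.ndarray, k: int) -> np.ndarray:
    """Exact solver for a toy problem: binary vector selecting the k cheapest items."""
    x = np.zeros_like(costs)
    x[np.argsort(costs)[:k]] = 1.0
    return x


class CachedSolver:
    """Hypothetical solution-caching wrapper: run the exact solver on the first call
    and every `refresh_every`-th call; otherwise return the best pooled solution."""

    def __init__(self, k: int, refresh_every: int = 5):
        self.k = k
        self.refresh_every = refresh_every
        self.pool: list = []  # previously computed (feasible) solutions
        self.calls = 0
        self.exact_solves = 0

    def __call__(self, costs: np.ndarray) -> np.ndarray:
        self.calls += 1
        if not self.pool or self.calls % self.refresh_every == 0:
            x = solve_top_k(costs, self.k)
            self.exact_solves += 1
            if not any(np.array_equal(x, p) for p in self.pool):
                self.pool.append(x)
            return x
        # Cheap inner "solve": minimize c^T x over the cached feasible solutions only.
        pool = np.stack(self.pool)
        return pool[int(np.argmin(pool @ costs))]


rng = np.random.default_rng(0)
solver = CachedSolver(k=3, refresh_every=5)
# Stand-in for predicted costs produced during 100 training steps.
decisions = [solver(rng.normal(size=10)) for _ in range(100)]
print(f"{solver.exact_solves} exact solves for {solver.calls} calls, pool size {len(solver.pool)}")
```

With `refresh_every=5`, 100 calls trigger 21 exact solves: the first call, when the pool is empty, plus every fifth call. A real implementation would replace `solve_top_k` with the actual solver and could warm-start it from the best pooled solution.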

Differentiability Requirements

Many practical optimization problems involve discrete decisions, non-convex objectives, or other structures that preclude straightforward differentiation. While methods like perturbation-based smoothing and stochastic approximation provide workarounds, they introduce approximation errors and hyperparameters that must be carefully tuned [52]. The quality of gradient estimates can significantly impact learning performance, and poor approximations may lead to suboptimal solutions or training instability.
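The sketch below illustrates perturbation-based smoothing in the spirit of the perturbed optimizers of Berthet et al. listed in the references. It applies the idea to the simplest discrete solver, an argmax over the vertices of the simplex. The hard solver's finite-difference gradient is zero. Averaging the solver's output under Gaussian noise instead yields a smooth solution with a non-zero Jacobian estimate. The noise scale `sigma` and the sample count `n_samples` are the kind of hyperparameters mentioned above. The values used here are arbitrary, and a larger `sigma` gives smoother but more biased outputs.

```python
import numpy as np


def hard_argmax(theta: np.ndarray) -> np.ndarray:
    """Discrete solver: one-hot maximizer of <theta, y> over the simplex vertices."""
    y = np.zeros_like(theta)
    y[np.argmax(theta)] = 1.0
    return y


def perturbed_argmax(theta: np.ndarray, sigma: float = 0.5, n_samples: int = 2000, seed: int = 0):
    """Monte Carlo estimate of the smoothed solution E[argmax(theta + sigma * Z)]
    and of its Jacobian E[argmax(theta + sigma * Z) Z^T] / sigma, with Z ~ N(0, I)."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal((n_samples, theta.size))
    ys = np.stack([hard_argmax(theta + sigma * zi) for zi in z])
    y_smooth = ys.mean(axis=0)
    jac = ys.T @ z / (n_samples * sigma)  # jac[a, b] estimates d y_a / d theta_b
    return y_smooth, jac


theta = np.array([1.0, 0.8, -1.0])
step = np.array([1e-3, 0.0, 0.0])
# Finite difference of the hard solver: all zeros, because a tiny change in
# theta[0] does not change which vertex is optimal.
print((hard_argmax(theta + step) - hard_argmax(theta)) / 1e-3)

y_smooth, jac = perturbed_argmax(theta)
print(y_smooth.round(2), jac[0, 0].round(2))
```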

LLM Reliability and Validation Burden

Large language models, while impressive, are not infallible and can generate incorrect formulations with high confidence. They may misinterpret problem descriptions, omit important constraints, or introduce logical errors that are not immediately apparent [53]. This necessitates rigorous validation procedures, which can partially offset the time savings from automated formulation. Ensuring reliability requires careful prompt engineering, comprehensive validation protocols, and maintained human expertise in the loop.
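One concrete validation procedure is differential testing on small instances. The LLM-generated formulation is solved exactly by enumeration, and the resulting optimum is checked against an independent, analyst-written encoding of the stated requirements. The sketch below assumes a hypothetical dictionary schema for a 0/1 formulation and a toy project-selection problem in which the generated model omits a "fund at most two projects" requirement. All function names are illustrative. Passing these checks does not prove a formulation correct; it only means no counterexample was found on the tested instance.

```python
from itertools import product

# Hypothetical schema for an LLM-generated 0/1 formulation:
# maximize sum(objective[v] * x[v]) subject to sum(coeffs[v] * x[v]) <= rhs for each constraint.
candidate = {
    "objective": {"x1": 5, "x2": 4, "x3": 3},
    "constraints": [
        {"name": "budget", "coeffs": {"x1": 4, "x2": 3, "x3": 2}, "rhs": 9},
        # The stakeholder also asked to fund at most two projects; that constraint is missing.
    ],
}


def requirements_ok(x: dict) -> bool:
    """Analyst-written check of the informal requirements (the trusted reference)."""
    within_budget = 4 * x["x1"] + 3 * x["x2"] + 2 * x["x3"] <= 9
    at_most_two = x["x1"] + x["x2"] + x["x3"] <= 2
    return within_budget and at_most_two


def undeclared_variables(form: dict) -> set:
    """Structural check: variables used in constraints but missing from the objective."""
    declared = set(form["objective"])
    used = {v for con in form["constraints"] for v in con["coeffs"]}
    return used - declared


def brute_force_optimum(form: dict):
    """Enumerate every 0/1 assignment; exponential, so only for tiny test instances."""
    names = sorted(form["objective"])
    best_x, best_val = None, float("-inf")
    for bits in product((0, 1), repeat=len(names)):
        x = dict(zip(names, bits))
        feasible = all(
            sum(a * x[v] for v, a in con["coeffs"].items()) <= con["rhs"]
            for con in form["constraints"]
        )
        value = sum(c * x[v] for v, c in form["objective"].items())
        if feasible and value > best_val:
            best_x, best_val = x, value
    return best_x


def validate(form: dict, requirements_check) -> list:
    """Return human-readable issues; an empty list means no issue was found."""
    issues = [f"undeclared variable: {v}" for v in sorted(undeclared_variables(form))]
    if issues:
        return issues
    x_star = brute_force_optimum(form)
    if x_star is None:
        issues.append("no feasible assignment on the test instance")
    elif not requirements_check(x_star):
        issues.append(f"optimum {x_star} violates a stated requirement")
    return issues


print(validate(candidate, requirements_ok))  # flags the missing cardinality constraint

fixed = {
    "objective": candidate["objective"],
    "constraints": candidate["constraints"]
    + [{"name": "at_most_two", "coeffs": {"x1": 1, "x2": 1, "x3": 1}, "rhs": 2}],
}
print(validate(fixed, requirements_ok))  # prints an empty list
```

On the candidate, `validate` reports that the optimum funds all three projects and violates a requirement. After the missing constraint is added, it reports no issues.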

Moreover, LLMs trained on general corpora may lack specialized OR knowledge, limiting their ability to handle advanced modeling techniques or domain-specific conventions. Fine-tuning on OR-specific corpora may improve performance but requires substantial curated data [54].

Interpretability and Trust

The hybrid methodology introduces complexity that can reduce interpretability compared to traditional approaches where prediction and optimization are cleanly separated. Decision-makers may find it difficult to understand why certain predictions lead to specific decisions when the predictive model has been trained end-to-end through a complex optimization layer [55]. This opacity can hinder trust and adoption, particularly in high-stakes domains where decisions must be explainable and auditable.

Developing explanation mechanisms for decision-focused models remains an important research direction. Techniques from explainable AI, such as feature importance analysis, counterfactual explanations, and sensitivity analysis, must be adapted to the integrated prediction-optimization context [56].
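A simple way to adapt sensitivity analysis to the integrated setting is to probe the decision rather than the prediction. For each input feature, the probe finds the smallest perturbation that changes the solver's output. The sketch below assumes a hypothetical linear cost predictor `W` that feeds a "choose the cheapest item" solver, and it scans a fixed grid of perturbations. The function names and the grid are illustrative. Grid scanning is only practical for small models and fast solvers.

```python
import numpy as np


def decide(features: np.ndarray, W: np.ndarray) -> int:
    """Predict-then-optimize pipeline: linear cost predictor, then choose the cheapest item."""
    predicted_costs = W @ features
    return int(np.argmin(predicted_costs))


def decision_flip_distances(features: np.ndarray, W: np.ndarray, grid=None):
    """For each input feature, the smallest tested perturbation (trying + then -)
    that changes the decision, or None if no tested perturbation changes it."""
    if grid is None:
        grid = np.linspace(0.05, 2.0, 40)  # perturbation sizes 0.05, 0.10, ..., 2.0
    base = decide(features, W)
    flips = []
    for j in range(features.size):
        flip = None
        for delta in grid:
            for signed in (delta, -delta):
                x = features.copy()
                x[j] += signed
                if decide(x, W) != base:
                    flip = float(signed)
                    break
            if flip is not None:
                break
        flips.append(flip)
    return base, flips


# Hypothetical model: 2 items, 3 features; feature 2 raises both predicted costs equally.
W = np.array([[1.0, 0.0, 0.5],
              [0.0, 1.0, 0.5]])
features = np.array([1.0, 1.5, 2.0])
base, flips = decision_flip_distances(features, W)
print(base, flips)
```

Here the decision flips when feature 0 rises or feature 1 falls by a little more than 0.5. Feature 2 shifts both predicted costs equally, so it changes both predictions but never the decision. A feature-importance score computed on predictions alone would miss that distinction.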

Generalization and Robustness

Models trained via decision-focused learning are optimized for the specific optimization problem and objective function used during training. If the problem structure changes, the model may not generalize well and could require retraining [57]. Similarly, if the deployed optimization formulation differs from the training formulation (e.g., due to new constraints or objective terms), the predicted parameters may not align with decision quality in the modified problem.

This lack of robustness to problem specification changes contrasts with traditional predictive models, which aim to estimate ground truth parameters independent of how they will be used. Research into robust decision-focused learning that maintains performance across problem variations is needed [58].

Integration Challenges

Organizational and Sociotechnical Considerations

Successful deployment of hybrid AI-OR systems extends beyond technical implementation to encompass organizational change management. Integrating these systems into existing decision-making processes requires:

- Training personnel to work effectively with AI-augmented tools

- Establishing governance frameworks for model validation and approval

- Defining clear roles and responsibilities for human oversight

- Building trust through transparency and demonstrated performance

- Managing the transition from legacy systems and processes

Resistance to adoption may arise from concerns about job displacement, loss of control, or skepticism about AI reliability. Addressing these concerns requires thoughtful change management and emphasis on augmentation rather than replacement of human expertise [59].

Software Engineering and Maintenance

Hybrid systems involve multiple complex components (data pipelines, machine learning frameworks, optimization solvers, and LLM APIs) that must be integrated and maintained. This creates software engineering challenges, including dependency management, version control across heterogeneous components, testing and validation pipelines, and monitoring of deployed systems [60].

The methodology requires expertise spanning machine learning, operations research, and software engineering, a combination that may be difficult to assemble in many organizations. Developing robust software frameworks and tools that abstract away some of this complexity could accelerate adoption [61].

Future Research Directions

Several promising directions for future research emerge from the methodology and its limitations.

Efficient Training Methods

Developing more computationally efficient approaches to decision-focused learning is critical for scaling to large problems. Potential directions include meta-learning approaches that transfer knowledge across related problems, hierarchical methods that decompose training into stages, and surrogate models that approximate optimization solvers with faster neural networks during training [62].

Uncertainty Quantification

Extending decision-focused learning to directly predict uncertainty distributions rather than point estimates could better support stochastic and robust optimization formulations. Integrating Bayesian deep learning or quantile regression with decision-focused objectives is a natural extension [63].
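The link between quantile regression and decision objectives is direct for the newsvendor problem. With underage cost cu and overage cost co, the average cost of an order equals (cu + co) times the pinball loss at level tau = cu / (cu + co), so fitting the tau-quantile is decision-aligned. The sketch below checks this numerically on hypothetical demand data with illustrative costs. It is a single-item toy, not the integrated Bayesian or quantile-based decision-focused method envisioned above.

```python
import numpy as np


def pinball_loss(q: float, y: np.ndarray, tau: float) -> float:
    """Mean quantile (pinball) loss of the point forecast q at quantile level tau."""
    diff = y - q
    return float(np.mean(np.maximum(tau * diff, (tau - 1.0) * diff)))


def newsvendor_cost(q: float, y: np.ndarray, cu: float, co: float) -> float:
    """Mean cost of ordering q: cu per unit of unmet demand, co per unit left over."""
    return float(np.mean(cu * np.maximum(y - q, 0.0) + co * np.maximum(q - y, 0.0)))


cu, co = 9.0, 1.0               # illustrative underage / overage costs
tau = cu / (cu + co)            # critical ratio, 0.9 here
demand = np.arange(1.0, 101.0)  # hypothetical demand observations 1, 2, ..., 100

# Fit the tau-quantile by minimizing the pinball loss over candidate order sizes.
candidates = demand
q_star = candidates[int(np.argmin([pinball_loss(q, demand, tau) for q in candidates]))]
q_mean = demand.mean()          # the squared-error-optimal point forecast

print(q_star, newsvendor_cost(q_star, demand, cu, co), newsvendor_cost(q_mean, demand, cu, co))
```

The pinball-optimal order lands at the 0.9 quantile of demand (about 90 units) with an average cost of 45, versus 125 for ordering the mean demand of 50.5. The same example shows the robustness concern raised earlier: a forecast fitted for tau = 0.9 stops being cost-optimal if the deployed cost ratio changes.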

Multi-Objective and Constrained Learning

Many practical decisions involve multiple competing objectives and hard constraints that must be satisfied. Extending decision-focused learning to multi-objective optimization and incorporating feasibility guarantees presents interesting challenges [64].

Interactive and Adaptive Formulation

Current LLM-assisted formulation is largely one-shot with human validation. Developing more interactive systems that engage in clarifying dialogues, learn from user feedback, and adapt to organizational modeling conventions could further improve formulation quality and efficiency [65].

Explainability and Interpretability

Creating explanation methods specifically designed for decision-focused models would improve transparency and trust. This might involve developing attribution techniques that trace decisions back through the optimization layer to input features and predictions [66].

Theoretical Foundations

While empirical results are promising, theoretical understanding of decision-focused learning remains incomplete. Questions about generalization bounds, sample complexity, and conditions under which decision-focused learning provably outperforms two-stage approaches warrant further investigation [67].

Conclusion

This article has presented a comprehensive methodological framework for integrating artificial intelligence and operations research in decision support systems. The framework centers on two complementary innovations: decision-focused learning, which trains predictive models directly against downstream optimization objectives, and LLM-assisted problem formulation, which leverages large language models to bridge informal requirements and formal mathematical structures. Together, these approaches enable more flexible, data-aware, and human-in-the-loop optimization workflows that maintain mathematical rigor while exploiting modern AI capabilities.

The methodology addresses fundamental limitations in traditional optimization pipelines. By aligning learning objectives with decision quality rather than prediction accuracy, decision-focused learning automatically adapts to asymmetric costs and complex decision structures that are prevalent in real-world applications. By automating aspects of problem formulation, LLM assistance reduces expertise barriers and accelerates the modeling process, making optimization more accessible and enabling faster iteration.

Validation through case studies in energy portfolio optimization, workforce scheduling, and supply chain network design demonstrated decision quality improvements of 7-15% over traditional two-stage approaches, while formulation time was reduced by a factor of 5 to 10. These results suggest substantial practical value, particularly in domains where the gap between prediction accuracy and decision quality is significant and where formulation complexity has historically limited OR adoption.

However, the methodology also faces important challenges. Computational intensity during training, differentiability requirements for complex optimization problems, reliability concerns with LLM-generated formulations, and reduced interpretability compared to traditional approaches all require careful consideration. Successful deployment demands not only technical implementation but also organizational change management, robust validation procedures, and maintained human expertise in the loop.

The hybrid AI-OR paradigm represents a philosophical shift in how we approach computational decision support. Rather than viewing AI and OR as competing paradigms, one data-driven and flexible and the other model-driven and rigorous, this framework positions them as complementary capabilities that address different aspects of the decision-making pipeline. AI provides pattern recognition, natural language understanding, and adaptive learning from data; OR provides mathematical precision, optimality guarantees, and structured reasoning about constraints and objectives. Their integration creates systems that are both more capable and more aligned with how decisions are actually made in complex organizational contexts.

Looking forward, continued research is needed to address computational efficiency, extend the methodology to broader problem classes, develop better explanation mechanisms, and build robust software frameworks that make these techniques accessible to practitioners. Theoretical foundations require deepening, and empirical validation across more diverse domains will strengthen the evidence base. As AI capabilities continue to advance and OR techniques mature, the synergies between these fields will likely deepen, opening new possibilities for intelligent, adaptive, and rigorous decision support.

The ultimate vision is of optimization systems that can be rapidly deployed by non-experts, that learn continuously from data while respecting hard constraints, that explain their recommendations transparently, and that collaborate effectively with human decision-makers. The methodological framework presented here represents progress toward this vision and suggests that the integration of AI and operations research is not merely additive: it creates capabilities that neither field could achieve alone.

References


R. S. Garfinkel and G. L. Nemhauser, Integer Programming. New York: Wiley, 1972.

S. P. Bradley, A. C. Hax, and T. L. Magnanti, Applied Mathematical Programming. Reading, MA: Addison-Wesley, 1977.

J. R. Birge and F. Louveaux, Introduction to Stochastic Programming, 2nd ed. New York: Springer, 2011.

D. Bertsimas and N. Kallus, "From predictive to prescriptive analytics," Manag. Sci., vol. 66, no. 3, pp. 1025-1044, Mar. 2020.

A. N. Elmachtoub and P. Grigas, "Smart 'predict, then optimize'," Manag. Sci., vol. 68, no. 1, pp. 9-26, Jan. 2022.

P. L. Donti, B. Amos, and J. Z. Kolter, "Task-based end-to-end model learning in stochastic optimization," in Proc. 31st Int. Conf. Neural Inf. Process. Syst., Long Beach, CA, 2017, pp. 5484-5494.

H. P. Williams, Model Building in Mathematical Programming, 5th ed. Hoboken, NJ: Wiley, 2013.

Y. Bengio, A. Lodi, and A. Prouvost, "Machine learning for combinatorial optimization: A methodological tour d'horizon," Eur. J. Oper. Res., vol. 290, no. 2, pp. 405-421, Apr. 2021.

D. Bertsimas and J. N. Tsitsiklis, Introduction to Linear Optimization. Belmont, MA: Athena Scientific, 1997.

N. Kallus and X. Mao, "Stochastic optimization forests," Manag. Sci., vol. 69, no. 4, pp. 1975-1994, Apr. 2023.

B. Wilder, B. Dilkina, and M. Tambe, "Melding the data-decisions pipeline: Decision-focused learning for combinatorial optimization," in Proc. AAAI Conf. Artif. Intell., vol. 33, Honolulu, HI, 2019, pp. 1658-1665.

M. Vlastelica et al., "Differentiation of blackbox combinatorial solvers," in Proc. Int. Conf. Learn. Represent., Addis Ababa, Ethiopia, 2020.

A. Mandi and T. Guns, "Interior point solving for LP-based prediction+optimization," in Proc. 34th Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2020, pp. 7272-7282.

A. H.-L. Lau, H.-S. Lau, and J. C. Wang, "How a dominant retailer might design a purchase contract for a newsvendor-type product with price-sensitive demand," Eur. J. Oper. Res., vol. 190, no. 2, pp. 443-458, Oct. 2008.

P. L. Donti and J. Z. Kolter, "Task-based end-to-end model learning," IEEE Trans. Pattern Anal. Mach. Intell., vol. 44, no. 11, pp. 7734-7749, Nov. 2022.

T. B. Brown et al., "Language models are few-shot learners," in Proc. 34th Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2020, pp. 1877-1901.

J. Ye et al., "Large language models for optimization," arXiv preprint arXiv:2310.06117, 2023.

Y. Liu et al., "Operations research meets artificial intelligence: Opportunities and challenges," arXiv preprint arXiv:2301.08326, 2023.

M. Yang et al., "OptiGuide: Large language models as optimization assistants," arXiv preprint arXiv:2307.03875, 2023.

C. Song et al., "Large language models as optimizers," arXiv preprint arXiv:2309.03409, 2023.

F. Ferreira, M. Tahmassebi, A. Idri, and J. Bernardino, "Decision-focused learning: Through the lens of learning to rank," in Proc. Int. Conf. Mach. Learn., Baltimore, MD, 2022, pp. 6391-6404.

B. Amos and J. Z. Kolter, "OptNet: Differentiable optimization as a layer in neural networks," in Proc. 34th Int. Conf. Mach. Learn., Sydney, NSW, Australia, 2017, pp. 136-145.

A. Agrawal, B. Amos, S. Barratt, S. Boyd, S. Diamond, and J. Z. Kolter, "Differentiable convex optimization layers," in Proc. 33rd Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2019, pp. 9558-9570.

A. Agrawal, S. Barratt, S. Boyd, E. Busseti, and W. M. Moursi, "Differentiating through a cone program," J. Appl. Numer. Optim., vol. 1, no. 2, pp. 107-115, 2019.

M. Blondel, O. Teboul, Q. Berthet, and J. Djolonga, "Fast differentiable sorting and ranking," in Proc. 37th Int. Conf. Mach. Learn., Vienna, Austria, 2020, pp. 950-959.

Q. Berthet, M. Blondel, O. Teboul, M. Cuturi, J.-P. Vert, and F. Bach, "Learning with differentiable perturbed optimizers," in Proc. 34th Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2020, pp. 9508-9519.

M. Niepert, A. Minervini, and L. Franceschi, "Implicit MLE: Backpropagating through discrete exponential family distributions," in Proc. 35th Int. Conf. Neural Inf. Process. Syst., Virtual, 2021, pp. 14567-14579.

E. Jang, S. Gu, and B. Poole, "Categorical reparameterization with Gumbel-softmax," in Proc. Int. Conf. Learn. Represent., Toulon, France, 2017.

S. Sahoo, C. Lampert, and G. Martius, "Learning equations for extrapolation and control," in Proc. 35th Int. Conf. Mach. Learn., Stockholm, Sweden, 2018, pp. 4442-4450.

S. Kim, P. Manchanda, and E. Lim, "Large language models for operations research: Progress and opportunities," arXiv preprint arXiv:2312.09012, 2023.

A. Holtzman, J. Buys, L. Du, M. Forbes, and Y. Choi, "The curious case of neural text degeneration," in Proc. Int. Conf. Learn. Represent., Addis Ababa, Ethiopia, 2020.

I. Dunning, J. Huchette, and M. Lubin, "JuMP: A modeling language for mathematical optimization," SIAM Rev., vol. 59, no. 2, pp. 295-320, 2017.

W. E. Hart, C. D. Laird, J.-P. Watson, D. L. Woodruff, G. A. Hackebeil, B. L. Nicholson, and J. D. Siirola, Pyomo—Optimization Modeling in Python, 2nd ed. New York: Springer, 2017.

A. Shapiro, D. Dentcheva, and A. Ruszczyński, Lectures on Stochastic Programming: Modeling and Theory, 2nd ed. Philadelphia, PA: SIAM, 2014.

D. Bertsimas and A. Thiele, "A robust optimization approach to inventory theory," Oper. Res., vol. 54, no. 1, pp. 150-168, Jan.-Feb. 2006.

P. Shenoy, "Human-AI collaboration in decision-making: Beyond taking the best of both worlds," in Proc. 30th ACM Conf. User Model. Adapt. Pers., Barcelona, Spain, 2022, pp. 78-87.

G. Perakis and G. Roels, "Regret in the newsvendor model with partial information," Oper. Res., vol. 56, no. 1, pp. 188-203, Jan.-Feb. 2008.

G. Gallo, M. D. Grigoriadis, and R. E. Tarjan, "A fast parametric maximum flow algorithm and applications," SIAM J. Comput., vol. 18, no. 1, pp. 30-55, Feb. 1989.

A. Elmachtoub, R. Grigas, and H. Zhao, "Smart predict-then-optimize for two-stage linear programs with side information," arXiv preprint arXiv:2010.00687, 2020.

E. S. Garner and J. E. Baker, "Staff scheduling in health care: State of the art," Eur. J. Oper. Res., vol. 12, no. 2, pp. 128-140, Feb. 1983.

A. Shah, B. Wilder, A. Perrault, and M. Tambe, "Decision-focused learning without decision-making: Learning locally optimized decision losses," in Proc. 36th Int. Conf. Neural Inf. Process. Syst., New Orleans, LA, 2022, pp. 1320-1332.

S. C. Graves and S. P. Willems, "Optimizing strategic safety stock placement in supply chains," Manuf. Serv. Oper. Manag., vol. 2, no. 1, pp. 68-83, Winter 2000.

M. Deza and E. Deza, Encyclopedia of Distances, 4th ed. Berlin, Germany: Springer, 2016.

R. Colunga, J. F. Ruiz-Munoz, and O. Cetinkaya, "Learning to optimize with reinforcement learning," Comput. Oper. Res., vol. 137, 105542, Jan. 2022.

N. Elmachtoub and P. Grigas, "End-to-end learning for parametric optimization," arXiv preprint arXiv:2205.12881, 2022.

D. Bertsimas, J. Dunn, and N. Mundru, "Optimal prescriptive trees," INFORMS J. Optim., vol. 1, no. 2, pp. 164-183, 2019.

K. H. Esbensen, D. Guyot, F. Westad, and L. P. Houmoller, "Multivariate data analysis: In practice," CAMO Software, 2010.

S. Po, P. Parpas, and S. Pavlovic, "Applications of machine learning in computational finance," SIAM Rev., vol. 64, no. 4, pp. 838-876, Nov. 2022.

M. Gasse, D. Chételat, N. Ferroni, L. Charlin, and A. Lodi, "Exact combinatorial optimization with graph convolutional neural networks," in Proc. 33rd Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2019, pp. 15580-15592.

J. Kotary, F. Fioretto, and P. Van Hentenryck, "End-to-end constrained optimization learning: A survey," arXiv preprint arXiv:2103.16378, 2021.

L. El Ghaoui, G. R. G. Lanckriet, and G. Natsoulis, "Robust classification with interval data," IEEE Trans. Pattern Anal. Mach. Intell., vol. 29, no. 11, pp. 1957-1966, Nov. 2007.

J. Wei et al., "Chain-of-thought prompting elicits reasoning in large language models," in Proc. 36th Int. Conf. Neural Inf. Process. Syst., New Orleans, LA, 2022, pp. 24824-24837.

J. Wang et al., "Mathematical reasoning capabilities of large language models," arXiv preprint arXiv:2301.13867, 2023.

C. Rudin, "Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead," Nat. Mach. Intell., vol. 1, no. 5, pp. 206-215, May 2019.

Z. C. Lipton, "The mythos of model interpretability," Commun. ACM, vol. 61, no. 10, pp. 36-43, Oct. 2018.

F. Ferreira, M. Tahmassebi, and A. Idri, "Robustness in decision-focused learning," arXiv preprint arXiv:2204.09672, 2022.

M. Fajemisin, S. Maragno, and D. den Hertog, "Optimization with constraint learning: A framework and survey," arXiv preprint arXiv:2110.02121, 2021.

P. Shneiderman, "Human-centered artificial intelligence: Reliable, safe & trustworthy," Int. J. Hum.-Comput. Interact., vol. 36, no. 6, pp. 495-504, 2020.

D. Sculley et al., "Hidden technical debt in machine learning systems," in Proc. 28th Int. Conf. Neural Inf. Process. Syst., Montreal, QC, Canada, 2015, pp. 2503-2511.

M. Zaharia et al., "Accelerating the machine learning lifecycle with MLflow," IEEE Data Eng. Bull., vol. 41, no. 4, pp. 39-45, Dec. 2018.

C. Finn, P. Abbeel, and S. Levine, "Model-agnostic meta-learning for fast adaptation of deep networks," in Proc. 34th Int. Conf. Mach. Learn., Sydney, NSW, Australia, 2017, pp. 1126-1135.

Y. Gal and Z. Ghahramani, "Dropout as a Bayesian approximation: Representing model uncertainty in deep learning," in Proc. 33rd Int. Conf. Mach. Learn., New York, NY, 2016, pp. 1050-1059.

K. Deb, Multi-Objective Optimization Using Evolutionary Algorithms. Chichester, U.K.: Wiley, 2001.

Q. Vera Liao and V. Varshney, "Human-centered explainable AI (XAI): From algorithms to user experiences," arXiv preprint arXiv:2110.10790, 2021.

M. T. Ribeiro, S. Singh, and C. Guestrin, "'Why should I trust you?' Explaining the predictions of any classifier," in Proc. 22nd ACM SIGKDD Int. Conf. Knowl. Disc. Data Min., San Francisco, CA, 2016, pp. 1135-1144.

S. Bai, J. Z. Kolter, and V. Koltun, "Deep equilibrium models," in Proc. 33rd Int. Conf. Neural Inf. Process. Syst., Vancouver, BC, Canada, 2019, pp. 690-701.
